This is a two-part post that takes on the issue of measuring the value of implementing Dynamo + Revit based workflows in Architecture, Engineering and Construction (AEC) companies. The first part discusses broadly how companies should group their Dynamo scripts for the purpose of measuring the value these scripts create, and what specific metrics can be obtained. The second part will be a technical post, detailing the process of implementing usage statistics tracking for your Dynamo scripts.

Visual programming frameworks such as Dynamo or Grasshopper aren’t really that new; they have been around for some time now. Users have been playing around with these tools, producing really interesting results – from efficiency increasing tools to various quality checking solutions, complex geometries and countless other things. The spectrum of what can be achieved with these tools is quite difficult to summarize in brief form. This might also be partially contributing to the scattered results achieved from implementing Revit + Dynamo based workflows inside AEC sector companies. The abundance of options can be overwhelming, as it becomes difficult to prioritise what to focus on and what to skip altogether.

Regardless of what Dynamo is used for, it is important not to forget the business aspects. AEC companies, design consultancies in particular, focus on maximising their billable hours. Therefore, developing Dynamo tools could be seen as a waste of resources. It is important to take well-controlled steps in this process and clearly communicate the value that the developed solutions will bring to the project or the company as a whole. But this is where it can get a little tricky: what type of value will this bring to the company? What is the actual metric? How do you quantify it?

While these questions might not always be a priority for those creating Dynamo scripts for your engineers and architects, it is different for the managers. Being able to provide some form of quantifiable metrics of the added value will go a long way in greenlighting future innovative solutions, be they BIM or VDC related or general digitalization efforts for your specific consultancy. Furthermore, it can help streamline the overall innovation strategy within your consultancy.

Before trying to measure the value created by computational BIM workflows, it is important to identify what the purpose of those Dynamo scripts will be. For simplicity, we’ll broadly group all possible scripts into one of three categories:

- Tools for improved BIM modelling
- Tools for improved BIM management
- Tools that enable new design capabilities for projects

We’ve selected this way of grouping Dynamo tools to differentiate in terms of how easy or difficult it is to obtain quantifiable metrics for measuring value. Another way to perceive these groups is through their aims. Dynamo scripts deployed to enhance and automate certain Revit modelling tasks are inevitably aimed at improving the overall productivity and efficiency of design teams, with the final outcome being reduced hours spent on certain tasks. This group is primarily aimed at improved efficiency. The second group deals more with automation tools for quality control of Revit models, or any BIM model in general. The goal is partly to decrease the time it takes to carry out these checks, but more importantly to find issues more reliably and sooner than through manual efforts. Lastly, when dealing with tools that enable new options altogether, it is tricky to predict what benefits they can bring, as they have the potential for significant benefits in both productivity and project quality.

The following figure illustrates, in relative terms, the potential for improving the efficiency and quality of BIM projects based on these categories. Everything here is relative, including the risk in developing these tools. When dealing with efficiency-boosting tool development, the largest risk is that it might not be possible to fully tailor the Dynamo script to the project, thus wasting time and reducing the time left to complete the project, which has to be done either way. For tools focused on quality improvements, the risk is slightly lower due to their separation from the modelling work. If the script development fails to deliver, some form of self-control would undoubtedly have been present during modelling either way, so the project should be completed on time, though it might contain slightly more mistakes. For tools that extend Revit capabilities or provide some sort of generative design solution, the risk is usually the greatest, as it is unclear beforehand what gains will be achieved for your team’s efficiency and project quality. The potential is also the greatest, but so is the time necessary to properly implement computational BIM solutions.

Evaluating the value of improved BIM modelling

The simplest way to measure the value created by a Dynamo solution for scripts that fall into this category is to record the work hours saved company-wide or on a project. This would require counting the number of times the specific Dynamo script was used, how long it takes to use it and, the more difficult task, comparing it with a certain benchmark. This benchmark can be the time it takes to carry out the task manually, which can be somewhat subjective depending on how one approaches it. Say there are 10 engineers creating reinforced concrete models in Revit; each one will likely take a slightly different approach to modelling rebar for, say, a bridge beam. That will undoubtedly translate into time differences between them, not to mention the countless other factors that affect the time it takes to produce one fully reinforced bridge beam model. These things must be considered carefully, as the outcome will later determine how useful the time investment in creating a certain Dynamo tool was, such as one automating the beam reinforcement to company standards.

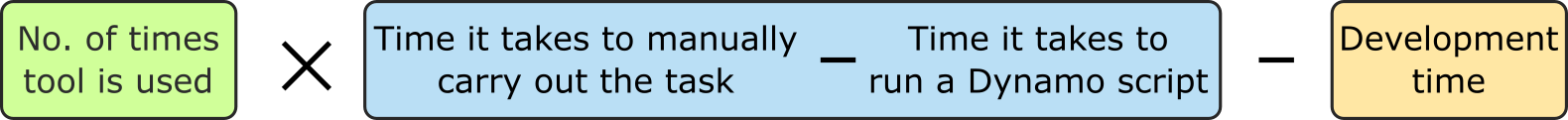

Let’s examine a few examples of Dynamo based tools for Revit reinforcement. Let’s imagine a Dynamo script that is meant to automatically generate the reinforcement bars of the bridge beam we mentioned just prior. The idea would be to enable the user to select the beam in question, run the script, prompt a simple user interface for the user to enter some preferred inputs and place the rebars within the Revit element. The benchmark for such a case would be the time it takes to do this task manually. Hence, the value added from such a script would be the architect/engineer hours saved minus the initial development time.

Now, let’s say it takes someone 4 hours on average to produce a rebar model manually and the scenario is relatively infrequent, maybe once a month, so 12 times a year. Developing the Dynamo solution takes 60 hours. And the script ends up rather complex, taking 15 minutes to run (yes, that would be excessive). In this scenario, we would not be creating any value for the company within the first year: the time saved for each run is 3 hours and 45 minutes (3.75 in decimal), which over 12 runs equates to 45 hours. After we factor in the development time, we get 45 - 60 = -15 hours. The company actually suffered a loss on this work.
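The arithmetic above can be written down as a small helper. This is only a minimal sketch: the function name `net_hours_saved` and its parameters are made up for illustration, and it deliberately ignores anything beyond the four numbers used in the example.

```python
def net_hours_saved(manual_hours, script_hours, runs, dev_hours):
    """Hours saved over `runs` uses of a script, net of development time."""
    saved_per_run = manual_hours - script_hours
    return saved_per_run * runs - dev_hours


# Bridge beam rebar example from the text:
# 4 h manually, 15 min (0.25 h) with the script, 12 runs in the first year,
# 60 h of development.
first_year = net_hours_saved(manual_hours=4.0, script_hours=0.25,
                             runs=12, dev_hours=60.0)
print(first_year)  # -15.0
```

A negative result means the tool has not yet paid back its development time over the period considered.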

But surely, the value proposition will become positive during the second year!? The value proposition of the tool is not static, it is dynamic. Over a long enough timeframe, any tool can look like a good idea. However, it does not always work out that way in reality. Script maintenance and support take time, and software capabilities added over time could replace the developed tool in a year or two (or never). The truth is that we just don’t really know what the future holds. Therefore, our advice for architecture and engineering consultancies would be to establish a clear end of life term for the developed tools. As an example, that could be 2 years. If a tool does not begin to add value within this period, it should be deprioritised or left for R&D teams that are not tied down by the need to maximise billable hours.
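One way to apply an end of life term is to estimate how long a tool needs to pay back its development time and compare that with the chosen term. The sketch below is an illustration only: the function names are hypothetical, usage is assumed to be constant from year to year, and maintenance hours are left out, so the real break-even point would come later than this estimate.

```python
def years_to_break_even(manual_hours, script_hours, runs_per_year, dev_hours):
    """Years of constant usage needed to recover the development time.

    Returns None if the script saves no time per run (it never pays back).
    Maintenance and support hours are NOT included (simplifying assumption).
    """
    saved_per_year = (manual_hours - script_hours) * runs_per_year
    if saved_per_year <= 0:
        return None
    return dev_hours / saved_per_year


def pays_back_within(end_of_life_years, **tool):
    """True if the tool recovers its development time within the given term."""
    years = years_to_break_even(**tool)
    return years is not None and years <= end_of_life_years


# Bridge beam rebar example from the text.
rebar = dict(manual_hours=4.0, script_hours=0.25,
             runs_per_year=12, dev_hours=60.0)

print(round(years_to_break_even(**rebar), 2))  # 1.33
print(pays_back_within(2, **rebar))            # True
```

With these numbers the rebar script would break even about a third of the way into its second year, so it passes a 2-year term but would fail a 1-year one.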

Another quick example – a Dynamo tool for automatically placing annotations and tags within Revit views. It is a very simple task; a person doing it manually would take just 10 minutes. However, it needs to be done thousands of times per year companywide. Let’s assume that it needs to be done 2500 times per year. The resulting script runs in 30 seconds, and development time was around 80 hours. Such a script would save the company hundreds of hours: (10 min - 30 s) x 2500 = 395 hours and 50 minutes, and subtracting the 80 hours of development leaves 315 hours and 50 minutes saved in the first year. The added value is clear in this case. However, it is probable that such a tool would not have been prioritised early on. It appears to be relatively simple and not worthwhile to pursue until one realises how repetitive the task is. The point is to always prioritise gains in productivity for tools that fall under the presently discussed category. Moreover, always interrogate the initial automation idea to identify clear warning signs, and pay particular attention to simple tasks that can be easily overlooked.
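To compare candidate tools on the same footing, the same estimate can be run for each of them and the results sorted. The snippet below is a rough sketch; the `Candidate` class and its field names are invented for illustration, and the inputs are the two examples discussed so far. Working it through for the annotation tool gives (10 min - 30 s) x 2500 = about 395.8 hours gross, or about 315.8 hours net of the 80 hours of development.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """A candidate automation task (all fields are illustrative estimates)."""
    name: str
    manual_min: float     # minutes to do the task manually
    script_min: float     # minutes to run the script
    runs_per_year: int
    dev_hours: float

    def first_year_net_hours(self):
        gross = (self.manual_min - self.script_min) * self.runs_per_year / 60.0
        return gross - self.dev_hours


candidates = [
    Candidate("bridge beam rebar", manual_min=240, script_min=15,
              runs_per_year=12, dev_hours=60),
    Candidate("view annotation tagging", manual_min=10, script_min=0.5,
              runs_per_year=2500, dev_hours=80),
]

# Highest first-year net saving first.
ranked = sorted(candidates, key=Candidate.first_year_net_hours, reverse=True)
for c in ranked:
    print(f"{c.name}: {c.first_year_net_hours():.2f} h")
# view annotation tagging: 315.83 h
# bridge beam rebar: -15.00 h
```

The seemingly trivial 10-minute task comes out well ahead of the 4-hour one simply because of how often it is repeated.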

Now that we’ve analysed some nice theoretical situations, it is worth noting that reality can be a bit more complicated. It will not always be so easy to evaluate whether a tool was actually worth developing. Some users will only use the script every other time, others will completely refuse to use it and keep on doing things manually; after all, no one is going to place “Dynamo Police” behind everyone’s backs and force the usage of these tools. Then, there will be times when a task is slightly altered from the initial constraints the tool was developed for and, thus, the tool ends up not being compatible with the problem at hand. The actual created value will be less than expected from simple estimations such as those shown here. In some cases, the tool might need to be written off as a “learning experience”. But how will one know in the end?
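Until real usage data is available, one rough way to account for these effects is to scale the number of runs in the simple estimate. The sketch below assumes two hypothetical factors that are not part of the original examples: `adoption_rate` (the share of suitable tasks where people actually use the script) and `applicable_share` (the share of tasks that fit the constraints the script was built for).

```python
def realised_net_hours(manual_hours, script_hours, tasks, dev_hours,
                       adoption_rate=1.0, applicable_share=1.0):
    """Net hours saved when only part of the tasks are done with the script.

    adoption_rate and applicable_share are illustrative assumptions,
    each a fraction between 0 and 1.
    """
    scripted_runs = tasks * applicable_share * adoption_rate
    return (manual_hours - script_hours) * scripted_runs - dev_hours


# Annotation example from the text, but with the script used
# only "every other time" (adoption_rate = 0.5).
ideal = realised_net_hours(10 / 60, 0.5 / 60, tasks=2500, dev_hours=80)
half_used = realised_net_hours(10 / 60, 0.5 / 60, tasks=2500, dev_hours=80,
                               adoption_rate=0.5)

print(round(ideal, 1))      # 315.8
print(round(half_used, 1))  # 117.9
```

Halving adoption cuts the net saving by more than half, because the development time is spent regardless of how often the tool is used.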

As mentioned in the introduction of this post, the safest way to ensure accurate measurements is to record the actual usage data of the developed computational BIM tools and constantly compare it against benchmarks. Since we are talking about the benefits and value of BIM automation tools, it makes the most sense to implement the usage tracking within the Dynamo scripts themselves, in the form of a Python node, and to store the collected data in an SQL database or text logs. The larger the consultancy and the number of people using the implemented Revit + Dynamo based workflow solutions, the more sense it makes to automate the process through databases and dashboards such as Power BI. User information such as machine id, software version, project file names, exact times of running the script and other information could be recorded. All this information would later provide beneficial insights, not only for quantifying the delivered value and for performance evaluations, but also for making better-informed decisions in the future when it comes to workflow implementations and tool development for large offices.
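As a rough illustration of what such tracking might look like, the sketch below logs each run to an SQLite table and then compares the logged runs against a manual benchmark. It is a standalone Python example under several assumptions: the table name `script_runs`, its columns, the function names and the sample values are all made up here, an in-memory database stands in for a shared company database, and the code has not been adapted to Dynamo's Python node (where available modules depend on the Python engine in use).

```python
import platform
import sqlite3
from datetime import datetime, timezone

# Hypothetical schema: one row per script run.
SCHEMA = """
CREATE TABLE IF NOT EXISTS script_runs (
    id INTEGER PRIMARY KEY AUTOINCREMENT,
    script_name TEXT NOT NULL,
    machine_id TEXT NOT NULL,
    software_version TEXT NOT NULL,
    project_file TEXT NOT NULL,
    started_at TEXT NOT NULL,
    duration_s REAL NOT NULL
)
"""


def log_run(conn, script_name, software_version, project_file, duration_s,
            machine_id=None, started_at=None):
    """Record a single script run (machine name and UTC time by default)."""
    conn.execute(SCHEMA)
    conn.execute(
        "INSERT INTO script_runs (script_name, machine_id, software_version,"
        " project_file, started_at, duration_s) VALUES (?, ?, ?, ?, ?, ?)",
        (
            script_name,
            machine_id or platform.node(),
            software_version,
            project_file,
            started_at or datetime.now(timezone.utc).isoformat(),
            duration_s,
        ),
    )
    conn.commit()


def hours_saved(conn, script_name, benchmark_hours):
    """Logged runs x manual benchmark, minus the time the runs actually took."""
    runs, total_s = conn.execute(
        "SELECT COUNT(*), COALESCE(SUM(duration_s), 0) FROM script_runs"
        " WHERE script_name = ?",
        (script_name,),
    ).fetchone()
    return runs * benchmark_hours - total_s / 3600.0


# Placeholder values: two 15-minute runs of a hypothetical rebar script.
conn = sqlite3.connect(":memory:")
log_run(conn, "beam_rebar", "Revit 2024", "bridge.rvt", duration_s=900.0)
log_run(conn, "beam_rebar", "Revit 2024", "bridge.rvt", duration_s=900.0)

print(hours_saved(conn, "beam_rebar", benchmark_hours=4.0))  # 7.5
```

Counting real runs this way replaces the assumed "12 times a year" with what actually happened, which is the number the value estimate should be based on.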

I’ll paraphrase an important thought I’ve heard recently on workflow implementation for large AEC consultancies:

It is one thing to implement design automation for a single team but it’s a whole different thing transitioning to company-wide adoption of developed tools and workflows.

Having data readily available from Dynamo based workflow implementation efforts has the potential to ease this process.

In general, measuring efficiency in hours saved is the easiest metric to go for, as it can be simply translated into financial data, numbers for management to understand and appreciate. Hence, even if Dynamo will be used for other purposes, efficiency criteria should never be neglected if they are possible to measure.

Evaluating the value of improved BIM management

This category mostly deals with various tools for BIM project checking and data analytics, such as Revit model auditing, checks for conformity to company / project standards, Revit parameter management, view and sheet checking etc. It is more difficult to quantify how much value such tools add. Same as before, one way is to look through the efficiency prism, but this plays a secondary role to the core purpose of tools in this category. That purpose relates to increasing project quality and ensuring its consistency. Quality is of course highly subjective and very difficult to measure directly.

One metric that some companies track is the amount of rework required on their projects, which can be converted relatively easily into time metrics for financial data. Mistakes that were caught and corrected in time can provide huge financial benefits in the end, yet it is hard to make an objective comparison of how much improvement specific Dynamo scripts provided. If the project in question has an automated quality checking workflow based on Dynamo for Revit models, it is already too late to see how many mistakes there would have been had no automated quality checks been used, and vice versa. The only option is to compare against previous experiences. How much money a certain avoided mistake will save your consultancy is something only your company could estimate with any reasonable accuracy.

Lately, BIM data analytics solutions have become much sought after. On the one hand, they bring clarity and make BIM models and the modern design process simpler to understand for project managers and other interested parties; on the other, they necessitate constant maintenance and dedicated people to manage the system itself and make sure the data collection is working as intended. Measuring how much actual value such solutions provide is highly complex and subjective. Again, if there is a way to convert the metrics into some form of time metric, then it should be prioritised. The insights obtained from various data visualisation dashboards such as Power BI can be invaluable, literally. Even when your consultancy manages to notice new patterns or make important observations, that does not automatically translate into value creation for the company. That information must be used somewhere down the design process, and only then would it likely become clearer what sort of benefits were obtained in the final outcome – the delivered project / completed object.

Alternatively, a derivative metric could be substituted for time metrics. As an example, let’s consider the previous automated bridge beam reinforcement script. The existence of such a script would likely ensure design consistency within the engineering consultancy, as all bridge beams and their reinforcement layouts would be standardized. Standardisation leads to simpler checks, fewer mistakes, consistent results and fewer unnecessary risks. That is rather difficult to measure, but what could be measured are things like a reduction in the volume of reinforcement used or in the number of complicated rebar shapes.

Posing questions to yourself can also serve as a form of value measurement. Will this computational design tool provide new insight/data that was not available before? Will it reduce uncertainty? Will benefits cascade to other areas (e.g. standardized automatic reinforcement means drawings can be annotated faster and be clearer)? Many more questions can be asked, but the thought I am trying to convey is this: if a direct measurement is not obtainable, ask questions; if the answer is yes, check whether something can be quantified there, and keep going until you identify something with clear metrics for determining the value proposition of the tool.

Evaluating the value of new design capabilities

It is extremely difficult to estimate how much value a Dynamo or Revit API solution of this kind would bring. If the tool in question enables something that was not possible or was extremely complicated to do manually, and your consultancy now has the capability to do more than before, how do you quantify the value of that? As an example, we could take some highly complicated concrete geometry which also needs to be reinforced. While Revit can be utilized to great effect for structural rebar BIM models, there are numerous cases where the default tools are not sufficient to model something, at least not in a timely manner. With a bit of Dynamo, it will likely not be much of a problem.

So, returning to the value aspects, being able to do something your architecture/engineering consultancy was not able to do before could open new doors, potentially helping with future tendering and gaining new contracts. What is a new contract worth to your consultancy? It does sound highly lucrative. However, the risk of investing in the development of such tools is also very great, particularly if your teams lack past experience. What if your consultancy wins the bid on a BIM contract that requires drawingless project delivery with fully reinforced concrete models, and the bid was made on the basis that your teams will be able to develop some sort of custom automation solution to help deliver the project on time? Now, what happens if your teams fail to deliver and the solution does not work? The risks are difficult to manage, hence these scenarios are rather scarce, as companies try to avoid risk at all costs. But such tools will always have a high risk/reward associated with them.

One other example of such tools would be the now popular generative design solutions, or technically any form of optioneering or problem optimization applied to obtain better-informed results. These can be architectural massings or structural models that satisfy a whole array of conflicting requirements in an optimized way. The value of such tools comes down to whether a better outcome can be obtained in the same time as compared to manual efforts, which is extremely complicated to measure.

Taking optioneering as an example, say instead of a single design option we generate 12 options in a fraction of the time. Does this mean that the decision should be made from just these 12 options? Well, no: if there is still time, more options are desired. Hence there is a tendency with generative design, optioneering and optimisation solutions (as with most decision-making processes) to fill up all the allotted time, meaning that expecting a reduction in specialist hours is not sensible. However, an overall better design is reasonable to expect, though not guaranteed. Which metrics to track for the value proposition in this case depends on the needs of your consultancy’s clients, as they will form most of the criteria for any optimisation problem. The value comes from how much better a design your design teams can provide to your client (which will directly or indirectly revolve around expenses/income generation).

Quantifying the value of computational BIM design for consultants is not trivial. It inherently contains significant risks, since in many cases it will pay off only when it gets the consultancy new projects and clients that it would not obtain otherwise. Looking from another perspective, if we in the AEC industry fully adopt and transition to integrated project delivery, gaining value from such tools and measuring it will become easier, in part due to shared risk and rewards. But there are still plenty of obstacles on this path.

Summary

As discussed in this post, if a company wants to control the process of automated workflow implementation, it should resort to some form of metric collection and evaluation. The various possible computational BIM tools were grouped into three categories, based on whether they focus on increasing the productivity of your teams, improving the overall project quality or enabling new capabilities for your design teams and consultancy in general. Identifying early on the type of problem the tools aim to address will help to understand what sort of metrics to collect for value analysis.

The simplest scenario is to focus on counting the specialist hours saved by automating BIM modelling tasks (e.g. Revit reinforcement generation, pedestrian steel truss bridge generation, etc.) over manually carrying out these tasks. The other, more complicated option is to aim for derivative metrics when dealing with tools that enhance the BIM management process, such as quality checks. If these checks can reduce the number of possible mistakes, identify them early on and make corrections in time, or reduce the amount of wasted reinforcement and other design conflicts, then that can be measured to a degree and evaluated. Lastly, generative design solutions, and tools in general that extend the present capabilities of your design teams, will be the most difficult to evaluate. Since the risks are quite high and the rewards not certain, it is best to approach these issues with great care and try to include multiple parties in the decision-making process, as the value proposition might not be directly visible to the design consultancy but apparent to the client or contractor.

In general, prioritise efficiency first, measure the time saved by these solutions but keep an eye out for innovation opportunities in the future. A tool not worth pursuing today might be necessary tomorrow.

The ideas presented here are not straightforward answers and serve more like guidelines. Hopefully, this post is still relevant today and will promote the necessary discussions in your teams or just provide food for thought for yourself. It is easy to lose sight of the big picture and not notice that automating certain BIM modelling tasks might not be a positive gain for the company, even though it might be an excellent learning experience or a great tool to have. Without having some form of metrics, benchmarks or data collection for analytics, it might be difficult to get your ideas across to company management or to streamline the company-wide automated workflow implementation process.

The second part of this post provides a detailed example of how to implement relatively simple statistics tracking of your Dynamo scripts for Revit projects. You can read it here – How to record dynamo for Revit usage data into an SQL database.

If you have any questions about the topics addressed in this post or would like to consult with us on some other topic, drop us an email at info@invokeshift.com or fill out the contact form below.
