I’ve been stuck writing this for quite a while now. The overwhelming nature of the topic, and how much can be said about it, kept me from finishing it earlier. Luckily for me, a few wonderful articles I’ve read recently gave me the needed motivation, not to mention making it easier to write this opinion piece. These articles are – Generative Design is Doomed to Fail by Daniel Davis and – Is Generative Design Doomed to Fail? by Bill Allen from EvolveLab. I really recommend reading both, if not before then right after this article.

A disclaimer – this is an opinion piece. It will mostly reflect my personal opinion, as a researcher and engineer, on Generative Design (GD), the marketing talk, the hype surrounding it, the complexity of real projects, Computational Design, BIM, and my overall view towards innovation in the Architecture, Engineering and Construction (AEC) sector. You’ll likely notice that in some areas it might seem like I’m contradicting myself, and that may very well be true. That’s because I have mixed feelings about some aspects of Generative Design, or the hype surrounding it to be more precise. All in all, I will try to refrain from too much bias where appropriate but it’s inevitable there will still be plenty of that here.

This article will be quite long, even compared to the typical ones I write. Therefore, I’ve provided a table of contents to skip to whichever part you find interesting, though continuity of the text will be interrupted that way. I will summarize my key thoughts at the end of this article. If that’s all you’re here for, you’re welcome to skip the entire article.

Daniel Davis broke down his opinion into 6 points, which Bill Allen retained in his article. I will approach this slightly differently, as I want to explore the subject from slightly different perspectives. Nonetheless, I’ll touch on those 6 points in one way or another throughout the text.

Contents

- Is Generative Design really that innovative?

- Challenges with the present project structure and design workflow

- Generative design – a shift in the AEC design process?

- It’s not just about goals and constraints

- The real constraints in AEC are time and resources

- Effects of Generative Design on creativity

- The scale and scope of the problem is critical for the success of GD

- Seamless integration of Generative Design in the project workflow

- Where Generative Design has the most potential to shine

- What’s next?

- Summary

- References

Is Generative Design really that innovative?

To start off, what’s so innovative about GD? Sure, it’s cool, and with carefully chosen examples it can look like something from a sci-fi movie. But when you deconstruct it, at its core, it is usually some form of an evolutionary algorithm that takes input variable ranges, executes the evaluator, explores the design space for optimal solutions and presents a multitude of possible solutions that satisfy the initially provided criteria.

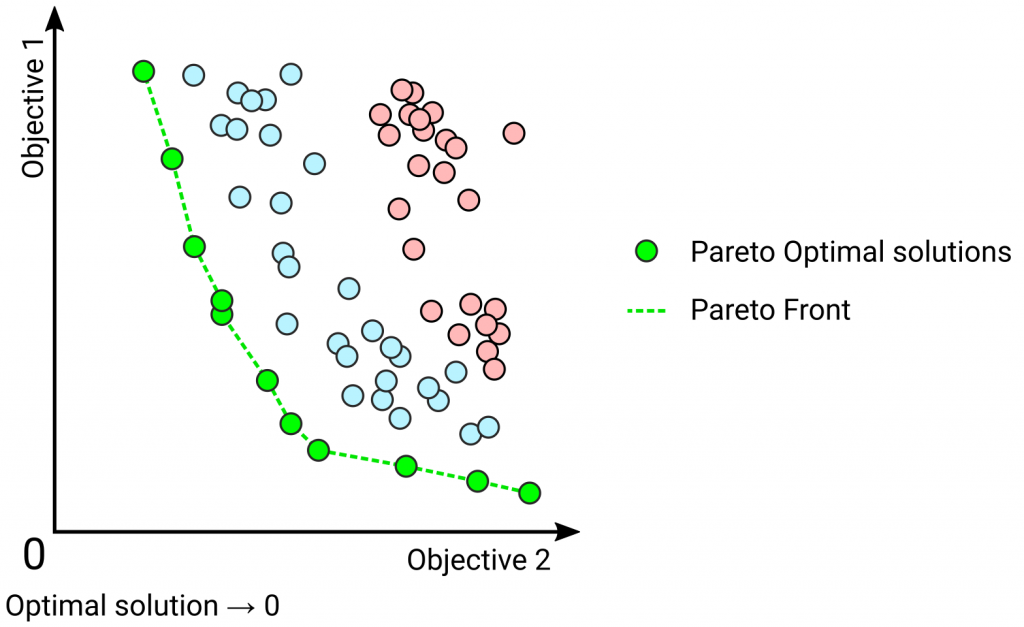
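To make the loop described above a bit more tangible, here is a minimal sketch in Python. It is a deliberately tiny, single-objective genetic algorithm, not NSGA-II and not what any particular GD product runs. The function `evolve`, the `toy_evaluator` and all parameter values are my own assumptions for illustration only.

```python
import random


def evolve(evaluate, bounds, pop_size=30, generations=40, mutation_rate=0.2, seed=0):
    """Minimise `evaluate` over box-bounded variables with a very small genetic algorithm."""
    rng = random.Random(seed)

    def random_design():
        # One value per input variable, drawn from its allowed range.
        return [rng.uniform(lo, hi) for lo, hi in bounds]

    def crossover(a, b):
        # Uniform crossover: each variable is copied from one of the two parents.
        return [x if rng.random() < 0.5 else y for x, y in zip(a, b)]

    def mutate(design):
        # Occasionally resample a variable inside its range.
        return [rng.uniform(lo, hi) if rng.random() < mutation_rate else x
                for x, (lo, hi) in zip(design, bounds)]

    population = [random_design() for _ in range(pop_size)]
    for _ in range(generations):
        ranked = sorted(population, key=evaluate)
        parents = ranked[: pop_size // 2]  # the better half survives unchanged
        children = [mutate(crossover(rng.choice(parents), rng.choice(parents)))
                    for _ in range(pop_size - len(parents))]
        population = parents + children
    return min(population, key=evaluate)


def toy_evaluator(design):
    # Hypothetical evaluator: distance of the design from an arbitrary target point.
    x, y = design
    return (x - 3.0) ** 2 + (y + 1.0) ** 2


bounds = [(-10.0, 10.0), (-10.0, 10.0)]  # the "input variable ranges"
best = evolve(toy_evaluator, bounds)
print(best, toy_evaluator(best))
```

In a real study the evaluator would be the expensive part (a model regeneration, an analysis run), which is why the number of iterations matters so much.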

The evolutionary algorithm in question is usually a genetic algorithm (the most popular one – NSGA-II). In general, evolutionary algos have been around for decades, while NSGA-II, specifically, has been around since 2002. That algorithm isn’t even the fastest one for optimization, as it belongs to a group of global optimization algorithms that are slower than local search algorithms. Local search algorithms, without being too technical, are, subjectively, mathematically more sound methods of finding a viable design option within a certain region of the design space defined by some combination of variables. That viable solution is not guaranteed to be the global optimum. To put it more simply, a Genetic Algorithm is far better suited to exploring the entire variable spectrum to find viable solutions, but it will take significantly more iterations to find these optimal solutions. Which is exactly what GD is doing: presenting the user with an array of possible options, where all options are considered optimal in the sense that none of them can be improved on one objective without getting worse on another, hence forming a Pareto front.
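For readers who have not met the term, a Pareto front is simply the set of options that no other option beats on every objective at once. The sketch below filters such a set out of a list of scored options. The objective pairs (cost, material quantity) and their values are invented for illustration, both objectives are assumed to be minimised, and this brute-force filter is not the sorting procedure NSGA-II itself uses.

```python
def dominates(a, b):
    """True if `a` is no worse than `b` on every objective and strictly better on at least one."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def pareto_front(options):
    """Keep only the options that are not dominated by any other option."""
    return [a for a in options if not any(dominates(b, a) for b in options)]


# Hypothetical (cost, material quantity) scores for six design options.
scores = [(10, 5), (8, 7), (9, 6), (12, 4), (11, 6), (9, 9)]
front = pareto_front(scores)
print(front)  # [(10, 5), (8, 7), (9, 6), (12, 4)]
```

Here (11, 6) drops out because (10, 5) is better on both counts, and (9, 9) drops out because of (8, 7). The four remaining options are all "optimal", and choosing between them is still a human decision.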

So, in a form, it is just a glorified optimization toolbox/framework?! … That’s what I will try to explore through the various sections of this article.

Moving away from AEC, if we look into automotive and aerospace sectors (or overall mechanical design), we can see that Generative Design is not uncommon (while still not completely mainstream and inseparable from the workflows of those sectors). However, it often is approached through topology optimization which has also been around since the last century. Topology optimization is particularly beneficial for additive manufacturing, optimizing the shape of some part, reducing the material needed to produce something, while maintaining similar mechanical performance. This makes a lot of sense in terms of cost reduction and speed for that process.

One of the brakes slowing down the pace of adoption was the computing power available to an engineer back then. There is a certain threshold that, once passed, enables rapid adoption of technologies. I believe that the increase in computation capacity was the trigger for the mechanical world. Just to note, this doesn’t appear to have had the same effect for AEC.

Another aspect of Generative Design application within the mechanical world is the relatively similar problems they are applied on. The complexity of problems tackled is growing, but the types of problems are pretty much from the same categories. It didn’t replace the entire mechanical design process. Generative Design is just another tool in a very large toolbox for design space exploration.

There are two more things I want to introduce as I will refer to them later: Multi-Objective Optimization (MOO) and Multidisciplinary Design Optimization (MDO). They are not the same. Simply put, the former refers to finding optimal solutions when dealing with two or more objectives (this is what the NSGA-II algo does), whereas the latter deals with finding optimal solutions when two or more disciplines are involved.

MDO is a well-known concept in the aerospace sector. What it tries to achieve is to get an overall better-integrated approach to how aircraft design is approached, moving from sequential to parallel or simultaneous design when dealing with distinct disciplines such as structures, aerodynamics, costs, etc. Sounds a lot like what we’re trying to achieve with BIM (and ISO 19650 specifically) in the AEC, doesn’t it? It’s not quite that due to the natural differences between aerospace and AEC sector design process, project deliveries, etc. Nonetheless, I feel it’s worth bringing such concepts up in this article because Generative Design in AEC appears to be facing similar challenges, that is, if it really wants to be considered as a disruptive technology, a new way of thinking about design (a new paradigm?) as it presently is being hyped up to be.

Now, to answer my own question in the section title – no, I don’t think it is innovation per se (surely not disruptive), as the concept has been around for a while in various forms, called slightly differently over time. The present iteration is more mature, refined and way more user friendly with an overall better user experience. And this is important! If the threshold for entry can be lowered then more people will get involved, meaning more minds and more viewpoints to shape the technology, in this case, GD.

Challenges with the present project structure and design workflow

Yes, this article has a lengthy introduction. In order to explain a few of the arguments later on, I need to try and explain the current structure of projects and, at the same time, explore the views shared by Daniel Davis. I can relate to point 5 of his article (“Designers don’t work like this”). The entire design process of a building, bridge or some industrial plant is highly iterative. It cannot be defined by decision-making processes alone, since the decisions have to be materialized in specific structured ways and formats.

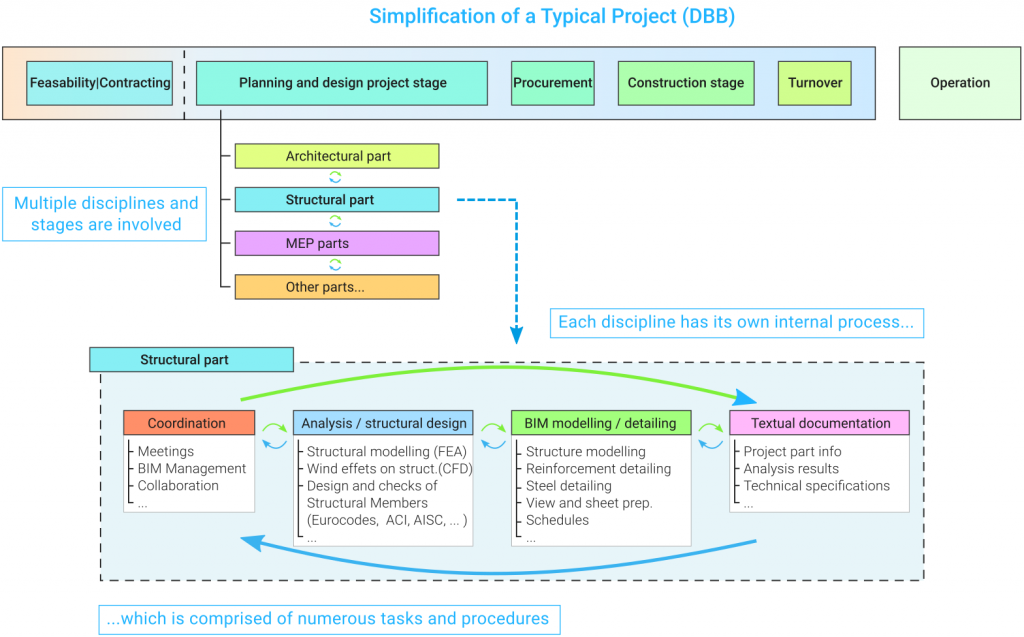

I will take one example of project delivery, the most popular one, the Design-Bid-Build case. While it does differ somewhat between countries and between private and public projects, it remains the most common project delivery type in the world and will stay that way in the near future.

Below you’ll find a greatly oversimplified project structure. I separated the Operations away from the rest as it would only get more complicated in later sections to write about all the aspects related to that. Another thing I’m separating is the contracting part, as that is highly dependent on country specifics. The remaining bulk of the project is comprised of Planning and Design, Procurement, Construction and Turnover.

Expanding just the Planning and Design stage, we get numerous disciplines that are needed to deliver the project design. The three (or should I say 5?) most common ones include Architecture, Structure, MEP. Depending on the project, there could also be other expertise requirements. Also, depending on whether the project is residential/commercial or infrastructure/industrial, the lead appointed party (lead consultant) could be either an architectural or an engineering consultancy. Regardless, the expertise from all these fields must come together into one whole solution, a project ready for the procurement process to commence. This, in practice, revolves around constant feedback and iteration, as all disciplines, while relatively sequential, depend on one another to deliver a quality solution.

Taking the structural part, for example, and expanding it further, we can see that it has its own internal process of how things get done. That process is slightly different from company to company, as each has its own workflows; whether they are well documented or a force of habit, some process is always there. That process is again comprised of numerous tasks that fall into various categories. These tasks range from thought intensive to very mundane and are dispersed throughout all stages of the design process. Thought intensive tasks can include collaboration meetings, solving conflicts between disciplines, creating analytical models for structural analysis and analysing the results of Finite Element Analysis (FEA). Other tasks, while highly mundane, are still necessary for the successful materialization of the project. These include summarising analysis results in Word, preparing technical specifications, exporting and checking various schedules, etc.

All these steps are in need of continuous tracking and modification throughout the entire project delivery cycle. For example, a change in the placement of a few columns by the architect can require the engineers to modify the analytical model, recalculate the loads, bending moments and forces for beams, columns and slabs, redesign elements, and modify the BIM models, schedules, text documents, etc. All while maintaining contact with architects and MEP guys, as there will be modifications in their parts as well.

As Daniel Davis noted, the design is not a linear process. Even if we had to work alone in our own fields of expertise, there are numerous tasks that are iterative in nature.

At present, I also don’t see how Generative Design can replace most of these tasks, without getting the industry to transition to more integrated project delivery methods. Which is happening, albeit very slowly, in some countries. Nonetheless, some compromises would need to be made to fit GD in a meaningful way.

Another issue is that at present, GD is stuck on relatively isolated tasks with minimal multidisciplinary considerations and even those appear to mostly be aimed at conceptual design (decision-making processes). This will be covered a bit in its own section.

What is the takeaway from this section? Design isn’t as simple as it may appear; it is a highly iterative and interdependent process. Generative Design will not replace that process any time soon, if ever. However, where it can shine is in the decision-making processes, the most effective of which take place very early in the design process. The present examples of GD in AEC are usually related to architectural schematic design. The problem is, how do you get out of this narrow window and expand into other stages, both earlier and later ones? The most critical decision-making is, arguably, made in the Predesign stages. In the case of public projects, such as infrastructure, many things could already be predestined in the brief of the call for bids for design services.

Generative design – a shift in the AEC design process?

I’ve separated this into its own section to elaborate a bit more on where I think GD stands in terms of technology and why it cannot be considered a disruptive technology, or even transformational. At least not in the short term. I think it can be categorized as sustaining technology, that is more incremental in nature. Which brings me to another wonderful and brief article by Paul Wintour of Parametric Monkey, called “Understanding innovation”. I highly recommend finding 5 minutes to go through it, it is very concise and to the point (unlike the present article you’re reading). In addition, Paul has released a second part “Identifying innovation opportunities” that is also a worthwhile read. I feel they are both quite down to earth and easy to grasp.

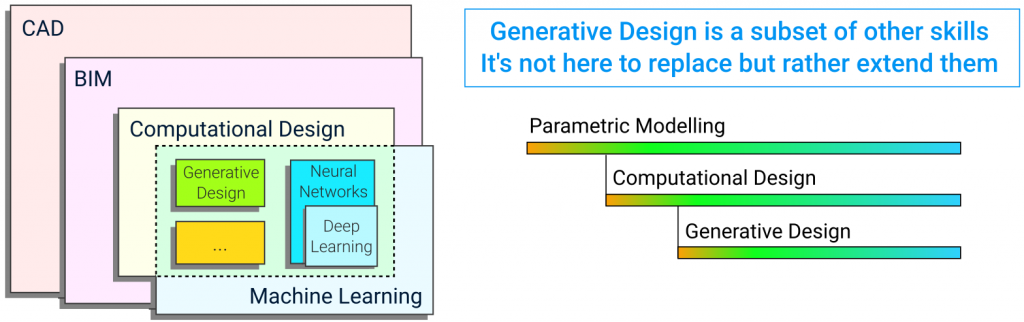

Generative Design should not be considered a major shift in how design is carried out. I’ve seen, and likely so have you, multiple presentations online and articles, where GD is presented in the following format: CAD > BIM > Computational Design > Generative Design. I consider this only partially true.

The style in which it is often presented makes it seem as if it stands to be a new paradigm in design. Which I do not agree with at present. The way I see it, GD is nested too deeply inside other areas, knowledge and skills to actually become definitive of how design will be done in the future. I’ve already gone over the design process and what it consists of, from numerous meetings to the various documents, permissions, etc. that need to be taken care of outside of all the “graphical” parts.

The figure below represents two things: on the left side, it shows how some technologies are nested into one another, while on the right, how GD requires other knowledge and depends on other skills. Some might not agree with my decision to include BIM in CAD, but ultimately, Computer-Aided Design (not drafting in this instance) is the enabler of BIM technology. Both of these differ from the other ones nested inside in that they can be considered “environments” that facilitate all the other actions and objects that originate from the design process. Hence, they can be considered transformative in how they affected the AEC industry, moving it forward to where we are today.

Taking BIM as an example, initially, it couldn’t have been considered disruptive/transformative; it was severely lacking by today’s standards. It took time for it to evolve and for the transition to happen. Jump to the present and we have widespread adoption, even international standards such as ISO 19650. But surprisingly, it isn’t all too distant from the CAD-based design process, besides the better handling of data and collaboration. One of the reasons for that, I would argue, is that both avoided clashing with the various regulations set in place for AEC projects, of which there is surely no shortage. GD, on the other hand, does not really provide an environment as of yet and I am not sure if it will ever get there. But maybe…

Another aspect to note is that a certain skill set is required before venturing into Generative Design. To keep it consistent with the other articles and information floating around, I’ll note the same two key ones, Parametric Modelling and Computational Design. The latter of them is also a subset of the former. So, we can say GD is basically nested two levels deep.

Skills such as Parametric Modelling are relatively widespread since the parametric notion is present in all major BIM software packages, such as Tekla, Revit, ArchiCAD, Allplan, etc. However, the paradox is that most real-life projects end up with BIM models that use very little Parametric Modelling. Most geometry is modelled fully manually, without spending much effort on defining parametric relationships, unless the software automatically takes some of that effort off the user’s hands. And this is fine for delivering a project in the present project structure and workflow context. Creating dumb geometry is more often perfectly suitable than we, BIM people, would like to admit.

Computational Design has made circles all over the world and pretty much all mid to large companies are in on it at some level. Nevertheless, it is difficult to say with confidence that it has gone mainstream. Workflows are being deployed, BIM projects are automated to increase efficiency, various use cases exist. I firmly believe in Computational Design based workflows with tools such as Dynamo for Revit. Though, I refrain from calling it disruptive (am pretty sure I haven’t included that word in any of my articles or posts before but don’t hold me to that). It will not completely replace the present design process but works very well to enhance and enrich it.

I believe the same applies to Generative Design, because at the core, it is just another tool in the designer’s toolbox to assist in the delivery of a quality project. Moreover, it needs to evolve on its own to become less dependent on Parametric Modelling and Computational Design skills. Right now there are two groups of solutions in AEC: some are their own distinct tools, dedicated to highly specific applications, while the other group is more versatile but faces more of the issues I’m sharing in this post.

Bill Allen has shared a few examples of developed GD tools like testfit.io and hypar.io in his post, so I will not go over them but reshare the links instead. I just advise keeping up to speed with their progress.

In the other group, I’d put Rhinoceros + Grasshopper + Galapagos/Octopus/Design Space Exploration and Revit + Dynamo + Project Refinery from Autodesk (now called Generative Design for Revit and included in Revit 2021). Both are quite different implementations of GD compared to the previously mentioned ones. The core aspect is that they enable the user to define pretty much any problem they desire with visual scripting through Grasshopper or Dynamo and to interface with the host applications, such as Rhino and Revit, to actually deliver something from GD that goes straight into the standard environment AEC BIM projects can be found in. But I will get back to that in a later section. By the way, those who are more interested in the Grasshopper alternatives for GD, as there are quite a few of them, should have a look at Free Generative Design – A brief overview of tools created by the Grasshopper community by Nathan Miller from Proving Ground.

To wrap this section up, GD is a long way from being considered disruptive tech. It doesn’t really satisfy the criteria to be considered a new way to approach AEC design. It remains in tool territory as opposed to BIM that is now an environment to facilitate all other necessary design activities.

It’s not just about goals and constraints

Many of the GD articles floating around seem to highlight this aspect, that now you need to think of design in terms of goals and constraints that define them, that you just have to explore your obtained designs, optimize and, well, basically that’s it. This way of thinking about GD is not honest or truthful. There are two aspects that I want to touch on here. One relates to what Daniel Davis wrote in his point 1, that “In reality, the designer is also responsible for creating the algorithm that generates the plans”. The other is my own, that thinking in terms of goals and constraints is what both engineers and architects have been doing since… well, always.

On to the first one. Neglecting to mention that Generative Design doesn’t just apply itself to a problem, nor that it has any understanding by default of what that problem is, I assume, stems from marketing decisions. The desire to oversimplify something to make it more appealing. Unless you are using tools such as the previously mentioned ones (testfit.io, hypar.io, etc), you will need to write the algorithm that defines your AEC design problem yourself.

Writing this algorithm is not a simple effort. Taking software like Grasshopper and Galapagos/Octopus/Design Space Exploration or Dynamo and Project Refinery, we need to have the technical skills to use those tools and to understand how to structure the algorithm so that it both generates what we want and evaluates it by some criteria. We also need to choose inputs that are actually meaningful to the design study we intend to execute, and numerous other aspects have to be taken into consideration before a user can actually take that to a GD application.
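To show what "generate and evaluate" means in the simplest possible terms, here is a plain Python stand-in for what would normally be a Dynamo or Grasshopper graph. Everything in it is a hypothetical illustration: the box-shaped building, the input names, the 3.5 m floor height, the two metrics and the 5000 m² area requirement are my assumptions, not taken from any real study or tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Inputs:
    """The variables the study is allowed to change (hypothetical)."""
    width_m: float
    depth_m: float
    floors: int


def generate(inputs):
    """Generator: turn input values into a design, here just a box-shaped building."""
    return {
        "floor_area_m2": inputs.width_m * inputs.depth_m,
        "perimeter_m": 2 * (inputs.width_m + inputs.depth_m),
        "floors": inputs.floors,
    }


def evaluate(design, floor_height_m=3.5):
    """Evaluator: reduce a design to the metrics the study will compare."""
    gross_area = design["floor_area_m2"] * design["floors"]
    facade_area = design["perimeter_m"] * floor_height_m * design["floors"]
    return {
        "gross_area_m2": gross_area,
        "facade_per_gross_area": facade_area / gross_area,
    }


def is_feasible(metrics, min_gross_area_m2=5000.0):
    """Constraint: the brief asks for at least this much gross area (assumed value)."""
    return metrics["gross_area_m2"] >= min_gross_area_m2


candidate = Inputs(width_m=40.0, depth_m=25.0, floors=6)
metrics = evaluate(generate(candidate))
print(metrics, is_feasible(metrics))
```

Even in this toy, every choice (which inputs vary, which metrics count, which constraints apply) is made by the person writing it. The GD engine only moves the input values around.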

As for the other aspect, I want to have a poke at the emphasis on goals and constraints as something different. All projects start, in one form or another, with identifying what has to be done and what issues will have to be overcome. These are goals and constraints, and they are present at all stages of design at all levels, from project-wide to localized ones affecting only a single team. An actual AEC project has so many goals and constraints that most don’t get documented at all; it would just not make sense. It’s not as much of a shift in the way designers think as it is presented to be. And, again, this is a good thing for the adoption of GD, since at the core, most principles of how one needs to work with Generative Design are not all that different from what we have been doing in AEC. Just to clarify, the issue with highlighting goals and constraints now as something different is more about marketing, building hype. It feels like an attempt to target company leadership that does not always consist of technical people. There must be a better way to promote GD and I’m sure that we’ll get to see that soon.

One last visual comparison I want to make before moving on to the next section. The actual goals and constraints selected for GD must make sense in the scope and scale of the project. Too simple a problem and a semi-optimal solution can be relatively clear from the start; too complex and it will be difficult to understand when to stop exploring the design space. I think the bottom figure illustrates this problem rather well.

The real constraints in AEC are time and resources

This critical consideration is worth its own section. There’s plenty of talk about how GD can greatly reduce the time it takes to interrogate multiple options compared to manual efforts. However, the significant time investment needed to apply Generative Design is ignored. Bill Allen countered Daniel Davis’ point 2 about the quality over quantity aspects of GD options, where he wrote: “Now, imagine you don’t only have 8-10 hours, but an infinite amount of time to design an infinite amount of options for your project AND you are able to leverage data to help problem solve.” I see what Bill means with this and the point is reasonable. Having the ability to interrogate more options over the same period of time can yield good results. Nonetheless, that sentence is slightly detached from the actual nature of AEC projects, which are significantly limited by time and resources. Infinite time does not exist, and paradoxically, the deadlines are getting stricter and stricter for designers, while the total time needed to deliver a project keeps increasing.

I do agree that the time investments are debatable, depending on the problem in question and the team’s knowledge and skill levels. In Bill Allen’s and EvolveLab’s case that doesn’t leave any doubt; I’m pretty confident they will tackle any and all problems coming their way. However, I would not want to generalize that to the entire AEC sector.

One chart I want to share expresses how I see Generative Design in relation to time. The figure below is a rough approximation of the timeframe it takes to deliver a design from concept to completion. It is all relative and it will differ on a case by case basis. Regardless, I feel that the upper chart is shrouded away from the eyes of most people in articles about GD. The actual timeframe and duration of GD implementation is really restricted so it does not affect the entire project in a detrimental way. The middle one represents what I see when I read various posts and watch videos about GD, it attempts to fabricate an illusion that it can replace a significant chunk of the design process and the time taken for GD is easily justifiable. Which is most certainly a rare case if there is one at present. How I see the future potential of optimization and design exploration for AEC is shown in the bottom diagram. You’ll notice that I’ve switched up the style of the diagram without a clear stop of the design process and added construction from the start. This is to illustrate the integrated project delivery nature we will likely be moving to over this and the next decades.

If GD is used to enable better decisions early on, as in the majority of present use cases, it is also important to remember that the longer it takes to reach a decision, the more value that decision has to add to the project. Hence, it is easy to get stuck in a loop of searching for that optimal solution rather than calling it a day if the outcome does not bring as much value as expected. It can become a lot like gambling, where you need to play more to win back what was lost. This does not only apply to architects but to engineers as well. Sometimes, rationality can be forgotten in the pursuit of the best engineering solution, which can be predestined to fail due to the excessive costs involved. In general, the decision-making process is already tricky, since people tend to use up all the time they are allotted to come up with a decision even when they are pretty certain of their choice early on. But what if…

Previously I’ve discussed the need for the user to actually write the algorithm to generate the wanted designs or whatnot for Generative Design. On the upside, if we expand this algorithm, we can see that it is basically Computational Design automation, for example, a Dynamo or Grasshopper visual script. We first start with parametric modelling, deciding on key variables and fixed parameters that all lead to a design automation solution for something like Revit based BIM projects. At that point we are probably 80% (totally accurate number) of the way towards implementing Generative Design. It doesn’t take that much more effort to clarify the metrics and evaluators to use to get GD up and running to explore the design space for us automatically. But what if the design automation through Grasshopper or Dynamo already delivers most of the benefits to be had?

If there are plans to deploy Computational Design to automate certain design tasks for BIM modelling, data or other related purposes, Generative Design should seriously be given consideration. Maybe there is something to be gained from it? Alternatively, if there aren’t any plans or reasons for using Computational Design on the project in question, GD is automatically out of the question.

Briefly, I also want to mention the importance of another resource and that is people. It is all too tempting to overlook how the AEC sector has become more integrated over time and what keeps it going in that direction. It all depends on people, who are often categorized as unbillable ones, such as BIM managers, coordinators, design technologists, digitalization experts and many more. These people hold the process together and supply the sector with most of its innovations. The narrower the tech, the more specialized the people needed for it. An area such as BIM was once very narrow as well, but now, most of the people supporting the previous CAD-based workflows have transitioned to BIM. Hence, I would call BIM transformative, as it managed to replace great parts of the previous design process rather than just adding on top. At present, specialized people, likely from the Computational Design field, will be needed to support most of the GD efforts.

Effects of Generative Design on creativity

I can see how Generative Design can affect the creativity of architects, as the design is not that easy to objectively evaluate. As Daniel Davis argued in his post, there is no consensus on what constitutes good architectural design. So, there is a risk that the things that are simple to quantify, such as economic factors or derivatives of that, can outweigh the other factors in the decision process when relying on GD to explore the design space. This can affect the “quality” of architecture.

I can compare the impact on design to the growing number of Reinforced Concrete (RC) precast constructions. Yes, they are very efficient in terms of the design, construction process and most importantly cost. But I believe it is starting to have a negative effect on some architecture. Office and residential buildings are beginning to look way too similar lately, with only variations of façade design. Subjectively, precast RC has also taken away the fun of designing structures for engineers. The process has become more about detailing than actual engineering, essentially converting many structural engineers into overqualified drafters/modellers. Generative Design has the potential for both better design and lacklustre design, the latter if better metrics are not taken into consideration when exploring the design space for optimal designs.

The scale and scope of the problem is critical for the success of GD

The success of Generative Design is not guaranteed. I’ve elaborated on quite a few aspects of GD in previous sections, but this time, I want to direct your attention to the problem itself. The scope and scale of the problem can make or break the attempts to gain something from design exploration through GD. It also forms a barrier, in my opinion, for Generative Design to become more than just another Computational Design tool and evolve into something more, “a new way to approach design”.

The thoughts and examples I will discuss come purely from an engineering background, as I feel there are already plenty of great posts dealing with architectural implementations and examples. Furthermore, some engineering examples are better at explaining the complexity of a real-life project in our AEC world.

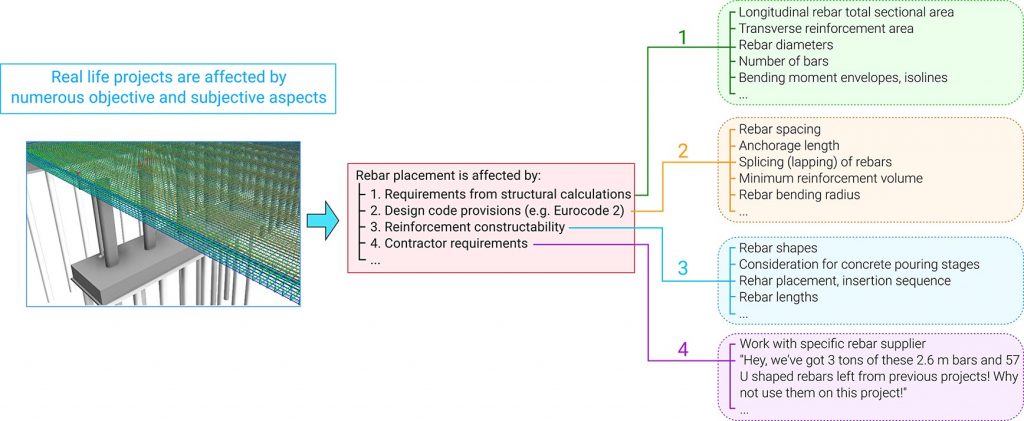

I will take a shot at deconstructing the reinforcement placement automation and optimization of RC structures. There are attempts floating around at developing various Neural Network and Generative Design based solutions for rebar modelling within BIM modelling platforms. The idea is noble. However, if we analyse the reinforcement problem, the first thing we will notice is how over-constrained the problem is. It is affected not only by the structural analysis results (bending moments, forces acting on a slab), but also by the provisions of various design codes like Eurocode 2, by rebar constructability prerequisites like concrete casting stages and the sequence of rebar placement, and by various subjective requirements like requests coming in from the contractor, who, for example, has a few tons of 16 mm diameter rebars of various lengths lying around and wants the engineers to use them since that saves him a little bit on costs. You can optimize the rebar layout to your heart’s desire, but when a real-life situation occurs, maybe the selected rebar fabricator cannot make the custom rebar shapes provided in the design, and the whole optimization effort can fall apart in an instant.

Furthermore, structures are critical elements, and reinforcement is part of that. Hence, it will have to be checked by an actual engineer. Even if you manage to optimize the rebar solution, someone will have to check it, tweak it to conform to the various written and unwritten rules, and simplify the layouts. Personally, I see significantly more use in deploying rebar automation tools in the form of Dynamo scripts to generate the rebars for various structures as the engineer requires. First, it saves the step of moving forward to optimization; second, a well-developed automation tool will provide 95% of the efficiency that could possibly be gained. Continuing forward and optimizing for the remaining 5% will be wasteful. Everything past design automation scripting will yield radically diminishing results. One would need to identify where and when to stop. Overall, I am for efficiency-focused improvements through the usage of Computational Design. I have written a few posts with the underlying theme of efficiency both on this website and on LinkedIn. There is plenty of design process waste that can be cut through automation.

Now imagine we scale the problem significantly, from reinforcing some slab to reinforcing the entire floor of a 10k square meter shopping mall that is more complex than your everyday rectangular box. I believe Generative Design immediately becomes more appealing in terms of potential efficiency gains for all parties: optimized costs for the client, reduced design and modelling time for the engineer, simpler arrangements for the contractor. That is, provided we can actually optimize for those. The point is, the scale can have a great impact on the viability of putting in the effort needed for GD based optimization.

Another case I want to briefly touch on is both rebar placement and concrete element optimization through Generative Design. There is a nice post on Three Experiments in Generative Design with Project Refinery. One of those is exactly that, an RC structure with rebar placement, optimized using GD. It is excellent for what it is, a research project. Yet, I will borrow it to highlight a few other notions. Optimizing for material savings, particularly in concrete, is not a good decision unless done at scale. Concrete is cheap; what often costs more is the labour and equipment needed to finish an RC structure. As an engineer, looking at the outcome of the optimization efforts in that article, my first thought is that it is completely irrational and out of touch with reality. Assume we need formwork for this: a contractor would have a look at such drawings and immediately add various coefficients for “complex design”, “more man-hours required”, “the engineer’s a nut case” to charge more.

The real world can be quite harsh. Maybe the optimized rebar layout is so complex that it involves an additional day or two of work for the workers on-site to arrange and fix all the bars before pouring concrete. Maybe this leads to unaccounted extra rental time for construction equipment, cranes, heavy trucks. Maybe other works get postponed for those two days because construction is mostly a sequential process. It all can quickly add up to significant expenses, instantly erasing any benefits gained from reducing material and rebar quantities. It might not always be apparent that in the real world, reducing the quantity of materials, for example, might have the opposite effect on the cost of the entire project. Choosing the correct metrics and the correct problem for design exploration is critical. If only someone could quantify what constitutes a correct problem and metrics…
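To make the trade-off above concrete, here is a minimal sketch of that arithmetic. Every name and number in it (`LayoutOption`, `total_cost`, the quantities and the unit rates) is a made-up assumption for illustration, not data from any real project or from the Project Refinery article.

```python
from dataclasses import dataclass


@dataclass
class LayoutOption:
    name: str
    rebar_tonnes: float   # total reinforcement steel
    concrete_m3: float    # total concrete volume
    fixing_days: float    # site days needed to place and fix the bars


def total_cost(option, rebar_rate=900.0, concrete_rate=110.0,
               crew_day_rate=2400.0, equipment_day_rate=1800.0):
    """Material cost plus time-dependent site cost (all rates are hypothetical)."""
    material = (option.rebar_tonnes * rebar_rate
                + option.concrete_m3 * concrete_rate)
    # Crew and rented equipment are both paid for every day spent fixing rebar.
    site = option.fixing_days * (crew_day_rate + equipment_day_rate)
    return material + site


# Hypothetical options: the "optimized" one uses less material but is slower to build.
baseline = LayoutOption("regular layout", 20.0, 300.0, 5.0)
optimized = LayoutOption("material-optimized layout", 18.0, 285.0, 7.0)

for option in (baseline, optimized):
    print(f"{option.name}: {total_cost(option):,.0f}")
```

With these assumed rates, the material-optimized layout ends up more expensive overall, because two extra site days outweigh the material saved. Change the rates and the conclusion can flip, which is exactly why the choice of metrics matters.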

As a fellow researcher, I understand the desire to explore the problem from all the angles the authors chose for their research. They utilized 3D printing and optimized for more than just load-carrying capacity, considering weight as well. I don’t want my comments above to be taken as too harsh. Overall, I do consider that specific work to be a quality effort for an R&D case, yet it is perfectly suited to illustrate my points above.

Another example I want to discuss relates to something most undergraduate engineers have had to do during their studies, i.e. play around with optimizing a truss structure. You optimize the layout and the steel profiles to reduce the weight of material required, mostly oblivious to the fact that having 10 different steel profiles and several types of bolts might not be efficient in terms of final fabrication costs. Truss optimization is a rather common problem for structural engineers and there are plenty of tools to facilitate this. However, lately, I’ve noticed a few companies working on Generative Design based BIM solutions for truss optimization. I think simple optimization can get the job done well enough within most existing FEA software, but I applaud the efforts since every little bit of competition is needed in the AEC software realm.
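The tension between minimum weight and the number of distinct profiles can be sketched with a small brute-force search. This is an illustrative toy under stated assumptions, not how any FEA or GD package works: the required areas, member lengths and catalogue are invented, and `assign` and `best_with_limit` are hypothetical helper names.

```python
from itertools import combinations


def assign(required, chosen):
    """Give each member the smallest chosen section that satisfies it."""
    sizes = []
    for need in required:
        fits = [area for area in chosen if area >= need]
        if not fits:
            return None  # this set of sections cannot cover every member
        sizes.append(min(fits))
    return sizes


def best_with_limit(required, lengths, catalogue, max_profiles):
    """Lowest area-times-length total using at most max_profiles sections."""
    best = None
    for count in range(1, max_profiles + 1):
        for chosen in combinations(catalogue, count):
            sizes = assign(required, chosen)
            if sizes is None:
                continue
            # Area times length is used here as a simple proxy for steel weight.
            steel = sum(a * l for a, l in zip(sizes, lengths))
            if best is None or steel < best[0]:
                best = (steel, chosen)
    return best


# Hypothetical data: required areas (mm^2), member lengths (m), available sections.
required = [410, 680, 950, 1220, 740, 500]
lengths = [3, 3, 4, 4, 5, 5]
catalogue = [500, 800, 1000, 1300, 1600]

print(best_with_limit(required, lengths, catalogue, max_profiles=4))
print(best_with_limit(required, lengths, catalogue, max_profiles=2))
```

Limiting the design to two sections costs extra steel compared with four, but halves the number of profiles to order, cut and connect. Whether that is a good deal depends on fabrication costs, which this sketch deliberately leaves out.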

Ultimately, what types of AEC engineering problems lend themselves well to Generative Design? Those that consider the overall design as well. A good example could be cable-stayed or suspension bridges, particularly design exploration with shape-finding, pre- and post-tensioning of cables, RC elements, tower architecture, tower section optimization, deck optimization, etc. These types of problems lend themselves well to Generative Design in my opinion, as both the scope and scale of the problem are aligned. The problem is complex and multi-objective in nature, and the scale is usually vast, as with many infrastructure projects. Moreover, such projects run for many years. In general, I think infrastructure projects would lend themselves better to Generative Design for engineers, and residential and commercial developments to Generative Design for architects.

What I’m trying to say with these examples is that the Generative Design approach can be too simplistic for the real world of engineering in AEC. This isn’t mechanical engineering, where benefits from things like topology optimization can be quite profound. The problems that really matter in structural engineering are often related to large-scale, complex projects, where GD would be beneficial but might not be ready for them yet.

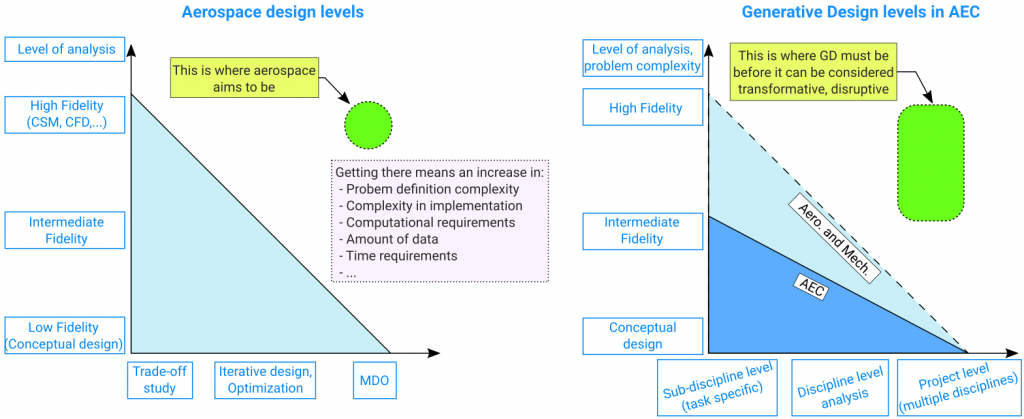

To finish this section off, I’ll pull up a figure comparing GD with the Multidisciplinary Design Optimization concept that I briefly wrote about in the introduction (I don’t blame you if you’ve already forgotten about it, kudos for reading this far though!). The image on the left can be found in quite a few presentations related to MDO. At the core, the problem with MDO is what Generative Design is facing in my opinion: they both struggle to scale in the fidelity of problems they are trying to solve and the number of disciplines they try to cover. Some issues are purely related to available computational capacity, storage and problem definition, but Generative Design also faces lower R&D spending in AEC, stricter deadlines and shorter project turnaround times than the aerospace sector.

Whether we will close the gap in modelling fidelity is an open question, as I see a paradox here. Model fidelity is relative: as it increases due to advances in technology, so do the benchmarks we compare against. Our definition is not static, it evolves with time.

Seamless integration of Generative Design in the project workflow

I’ve seen cool things done with Generative Design, interesting solutions found from design exploration. But I’m with Daniel Davis on his 5th point, mostly on the aspects of work continuity. At present, GD is a semi dead-end. Once you get what you want from your implementation of GD, how well does it fit into your existing workflow and the present project phase you’re at? Can you actually continue work from there? How will you handle revisions? I don’t blame GD entirely for this, since with Grasshopper and/or Dynamo, you do have the option of investing a little bit more effort into how the design automation part of your script works, so the outcome is actually workable in your BIM applications. If you are using some other solution, does the output fit your workflow, or does it necessitate altering the workflow to accommodate this new tool in your arsenal?

Needless to say, the effort to ensure proper integration can be exhausting in certain scenarios. It really is tricky to automate the output in such a way that the obtained model is fully editable to all possible extents. Right now, most users do not think through how their script will work within the entire design process. If a serious revision comes along, their models require significant rework. Sometimes it is not the user’s fault as the problem itself is just that complex.

One example from personal experience: a few years ago, my colleague and I developed a Dynamo workflow to automate the Revit model of a simple single-span RC bridge (it can be seen on the front page of this website), typical over here on local roads. But it was different from what we saw others doing. Instead of using Dynamo to just generate geometry, we standardized the Reinforced Concrete bridge elements and pre-modelled some with standardized insertion points and connectors to allow for a skeleton approach to bridge generation, where elements such as RC abutments, wings, beams, approach slabs, etc., would be either taken from a catalogue or generated with Dynamo based on a network of insertion points. This ensured work continuity in Revit, with easy modifications to elements becoming possible, including replacement of entire elements without breaking the model.
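The skeleton idea can be illustrated with a small, tool-agnostic data structure. This is only a conceptual sketch and not our actual Dynamo graph or any Revit API: `InsertionPoint`, `Element`, `BridgeSkeleton` and the family names are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class InsertionPoint:
    name: str
    x: float
    y: float
    z: float


@dataclass
class Element:
    family: str   # hypothetical catalogue entry, e.g. "Abutment_TypeA"
    anchor: str   # name of the insertion point the element hangs on


@dataclass
class BridgeSkeleton:
    points: dict = field(default_factory=dict)
    elements: dict = field(default_factory=dict)

    def add_point(self, point):
        self.points[point.name] = point

    def place(self, slot, family, anchor):
        if anchor not in self.points:
            raise KeyError(f"unknown insertion point: {anchor}")
        self.elements[slot] = Element(family, anchor)

    def replace(self, slot, family):
        """Swap the element in a slot; its anchor, and everything else, stays put."""
        self.elements[slot] = Element(family, self.elements[slot].anchor)

    def location(self, slot):
        point = self.points[self.elements[slot].anchor]
        return (point.x, point.y, point.z)


skeleton = BridgeSkeleton()
skeleton.add_point(InsertionPoint("abutment_start", 0.0, 0.0, 0.0))
skeleton.add_point(InsertionPoint("abutment_end", 18.0, 0.0, 0.0))
skeleton.place("start", "Abutment_TypeA", "abutment_start")
skeleton.place("end", "Abutment_TypeA", "abutment_end")

# A revision swaps one abutment type without touching the rest of the model.
skeleton.replace("end", "Abutment_TypeB")
```

The point of the design is that elements reference named insertion points instead of carrying their own coordinates, so a replaced element lands in the same place and the remaining elements are unaffected.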

It all seems relatively simple, but most of the work carried out was not on parametrizing geometry within Dynamo but on ensuring the workability and seamless integration into an existing workflow. That required a clear understanding of the entire design process and all the possible viable outcomes of the automation solution beforehand. Generative Design faces this right now, whether it is based on Grasshopper or Dynamo + Project Refinery, or a stand-alone solution. Until continuity is addressed in a satisfactory manner, it will either slow down the progress of GD or completely halt it at some point. Everything must come into one of the core AEC design environments.

Just to add, this is not only a problem of GD; it applies to Computational Design as well.

Where Generative Design has the most potential to shine

If you haven’t already noticed the prevailing theme throughout this post, then this section is just for that. To put it simply – decision-making processes are where the greatest benefits are to be had. However, design space exploration must be built on quantifiable metrics that matter, and must take advantage of the visual design exploration provided by a tool like Project Refinery to yield insight into outcomes that are not apparent from the selected metrics alone. Luckily, this appears to be where the majority of GD applications are at. I do wonder, though, whether it is for the same reasons I have in mind.

Most problems outside of the Predesign and Schematic Design Stages require a significantly higher level of fidelity in problem definition, BIM models, data. Later stages also demand better integration into the existing project workflows, meaning the outcomes from Generative Design have to be tweakable manually without significant effort. Once that gets sorted out in a seamless and efficient manner, most of what I wrote here will become obsolete. Time will tell.

In the near future, GD could provide great value when paired with “correct” design problems: ones that comprise multiple conflicting objectives, include metrics that are actually significant to the project, do not demand extended implementation times and can be fitted into the design process without interrupting other activities.
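For problems with multiple conflicting objectives, design exploration usually boils down to keeping the non-dominated (Pareto-optimal) options and leaving the final trade-off to a person. Below is a minimal sketch of that filter. The options and their two metrics (cost and construction days, both to be minimized) are invented for illustration, and this is not a description of how Project Refinery works internally.

```python
def dominates(a, b):
    """True if a is no worse than b in every metric and better in at least one.

    All metrics are assumed to be minimized.
    """
    no_worse = all(x <= y for x, y in zip(a, b))
    better = any(x < y for x, y in zip(a, b))
    return no_worse and better


def pareto_front(options):
    """Keep only the options that no other option dominates."""
    return {
        name: metrics
        for name, metrics in options.items()
        if not any(dominates(other, metrics) for other in options.values())
    }


# Hypothetical design options: (cost in thousands, construction days).
options = {
    "A": (100, 30),
    "B": (120, 25),
    "C": (130, 32),
    "D": (95, 40),
}

print(sorted(pareto_front(options)))
```

Option C is dropped because option A is both cheaper and faster. The remaining options each win on one metric and lose on the other, so choosing between them is a project decision, not something the filter can settle.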

What’s next?

GD will not be the last tech we see aimed at the design process. Plenty of inspiring work is being done by very smart people all around the industry, trying to come up with creative ways of solving AEC problems through innovations or resurrecting old tech for a new life in a brighter world.

Which technologies will become highly talked about next, I am not sure. They could come from anywhere: surrogate modelling in the sense of response surface modelling, kriging, deep learning, reinforcement learning (Q-learning), Generative Adversarial Networks. Speaking of which, there is already an interesting implementation done by Stanislas Chaillou. You can read a bit about it on ArchiGAN: a Generative Stack for Apartment Building Design.

While I’m sharing links, I also suggest checking out the references at the end of this post. You will find a few articles worth reading from Dieter Vermeulen, The Computational Engineer blog, Lorenzo Villaggi and other sources related to Generative Design.

Summary

The thoughts I’ve conveyed in this article might seem critical of Generative Design, but in the end, I am more optimistic about its success. I do agree with pretty much all points posted by Daniel Davis on some level but don’t consider those points enough to stop the evolution of the present incarnation of Generative Design. I’m more aligned with Bill Allen’s position. Design exploration has been successfully deployed in other sectors a while back, but due to the slow nature of our AEC sector, we’ve only started rather recently. Access to an easy to use GD solution is now available in the new version of one commonly used BIM modelling software, so we’ll be seeing a significant boost in use cases being explored.

There are still plenty of aspects of GD to explore and evolve. If we want to look at Generative Design as transformative or even disruptive tech, it needs to:

- Be developed with better consideration for the present and future project delivery methods.

- Carefully consider the timeframes of AEC projects.

- Be open and transparent about the actual work needed to implement GD.

- Integrate seamlessly into the working environment of an AEC project to ensure continuity while maintaining the workability of the models.

- Aim for a more multidisciplinary approach, expanding from isolated tasks and cases, including both architecture, structure, MEP, etc.

- Be simple to implement on such problems while increasing the fidelity of the models with minimal requirements for the user.

- Be interrogated for how it will affect the creativity of architects and engineers: will the aesthetic aspects of projects be compromised in favour of other metrics that are simpler to quantify?

- Be further explored in terms of identifying the most suitable problems and metrics to use.

- Replace parts of the present design process and transfer people over to this technology from other areas instead of requiring new people to support the technology. In my view, this determines whether the tech has truly transformative potential or is just another tool in the design toolbox.

These notions should also be kept in perspective when dealing with GD. Even if GD doesn’t achieve all or most of them, I am positive that design space exploration is not going anywhere. We’ll continue to see Generative Design implementations in AEC improving and even if it does not escape the level of being just another Computational Design tool, it will still have its uses.

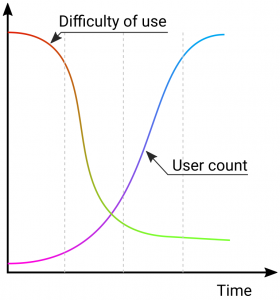

The one thing that I am critical of is the overhyping of technologies. Right now, I consider GD to be overhyped. The potential is there, as I’ve discussed, but it needs to be fostered gradually and adapted to AEC specifics. AEC is very slow to change, is largely risk-averse and has limited R&D spending compared to other sectors. This should not be forgotten. Technologies shouldn’t be rushed, or they risk being forgotten for failing to meet expectations. I have my own version of the Gartner hype cycle, shown in the accompanying figure, to help with my point.

Overhyping can have detrimental effects on the technology in question, since after the hype fades, it will drop into what is called the trough of disillusionment. In my version, you’ll also notice the abandonware pit, a term I really liked for its meaning, though I can’t locate where I first saw it. The idea is, if the drop is too deep, there is a significant risk that many projects and startups that rely on the technology in question will not survive to reach the Plateau of productivity. The technology could also end up being discarded in favour of another trendy one during that time. This has already happened to Generative Design, as the present iteration is not the first, but rather a more mature and improved one, with great user experience and simplicity of use compared to previous ones. Not to mention the expanded range of standalone solutions tailored to specific problems.

I am for sustainable growth of innovations, maintaining consistent progress over time and remaining semi-attached to the real world, being open and transparent about all its aspects. I understand this doesn’t sell well, but I’m an engineer, a BIM guy, not a sales guy. In other words, we need to flatten the curve!

Lastly, I want to thank you for taking the time to read this article. I understand it has likely taken a significant chunk of your day, for which I am truly grateful. My hope is that you found this enlightening, interesting, informative and worthy of a debate. If you have any thoughts or suggestions, found some problems or would like to share some critique, please head over to my LinkedIn, where I’ve posted this, or just drop me an email if you don’t want it public.

References

- https://www.danieldavis.com/generative-design-doomed-to-fail/

- https://www.evolvebim.com/post/is-generative-design-doomed-to-fail/

- https://parametricmonkey.com/2019/11/27/understanding-innovation/

- https://parametricmonkey.com/2020/03/17/identifying-innovation-opportunities/

- https://provingground.io/2019/11/19/free-generative-design-a-brief-overview-of-tools-created-by-the-grasshopper-community/

- https://blogs.autodesk.com/revit/2019/12/16/three-experiments-in-generative-design-with-project-refinery/

- https://devblogs.nvidia.com/archigan-generative-stack-apartment-building-design/

- https://www.autodesk.com/autodesk-university/article/Using-Generative-Design-in-Construction-Applications?linkId=80426650

- https://www.thecomputationalengineer.com/5-reasons-why-the-aec-industry-needs-an-open-source-common-language/

- https://medium.com/autodesk-university/geometry-systems-for-aec-generative-design-codify-design-intents-into-the-machine-9bd9ccec8def

- https://www.mckinsey.com/industries/capital-projects-and-infrastructure/our-insights/imagining-constructions-digital-future

- https://www.mckinsey.com/industries/capital-projects-and-infrastructure/our-insights/seizing-opportunity-in-todays-construction-technology-ecosystem
